AN AR-BASED VIDEO CONFERENCING SYSTEM

FocalSpace introduces a video conferencing system that dynamically recognizes relevant activities and objects through depth sensing and hybrid tracking of multimodal cues, such as voice, gesture, and proximity to surfaces. FocalSpace uses this information to enhance users’ focus by diminishing the background through synthetic blur effects.
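The background-diminishing effect described above can be illustrated with a minimal sketch. It assumes a depth frame already registered pixel-for-pixel to the color frame and measured in millimetres, and it uses a plain box filter as a stand-in for whatever blur the real system applies. The function names `box_blur` and `focus_on_depth_range` and all parameters are hypothetical, chosen for illustration only. The voice and gesture cues that decide which depth range is in focus are not modelled here.

```python
import numpy as np


def box_blur(image: np.ndarray, radius: int) -> np.ndarray:
    """Blur an H x W x C image with a (2*radius+1) square box filter."""
    if radius <= 0:
        return image.astype(np.float32)
    k = 2 * radius + 1
    height, width = image.shape[:2]
    # Repeat edge pixels so the output keeps the input size.
    padded = np.pad(
        image.astype(np.float32),
        ((radius, radius), (radius, radius), (0, 0)),
        mode="edge",
    )
    out = np.zeros(image.shape, dtype=np.float32)
    # Sum every shifted copy of the image inside the window, then average.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + height, dx:dx + width]
    return out / (k * k)


def focus_on_depth_range(
    color: np.ndarray,     # H x W x 3 color frame (assumed uint8)
    depth_mm: np.ndarray,  # H x W depth frame in millimetres (assumed aligned to color)
    near_mm: float,
    far_mm: float,
    radius: int = 4,
) -> np.ndarray:
    """Keep pixels whose depth lies in [near_mm, far_mm] sharp; blur the rest."""
    in_focus = (depth_mm >= near_mm) & (depth_mm <= far_mm)
    blurred = box_blur(color, radius)
    # Broadcast the H x W mask over the color channels.
    result = np.where(in_focus[..., None], color.astype(np.float32), blurred)
    return np.rint(result).astype(color.dtype)
```

In a live system the depth range would presumably be updated every frame from the tracked cues (for example, the depth of the active speaker), while the compositing step stays the same.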

Date: February 2012

Duration: 3 months

Role: Interaction design, Kinect development

Focus only on what matters in a conference

We decided to use both Kinects and webcams to achieve the desired effect. The first step was to roughly sketch out how the overall system should work.

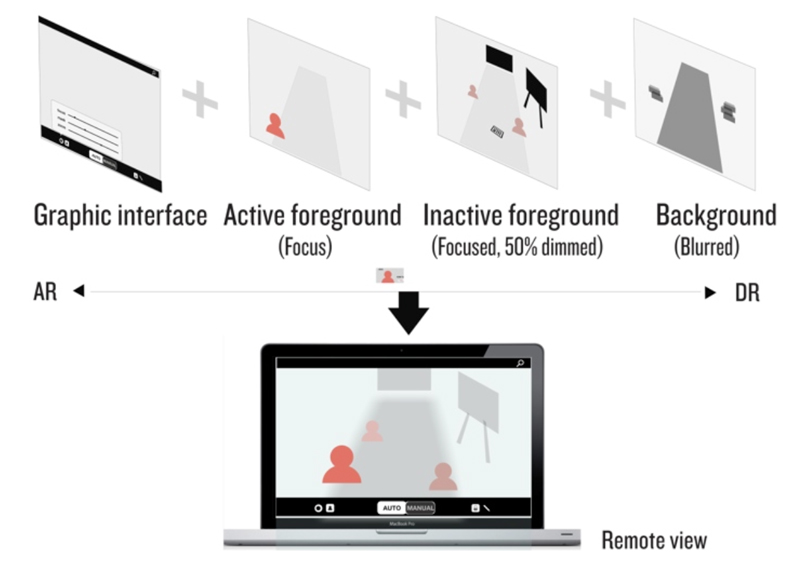

FocalSpace is a rather complicated system. Once we had the idea and the overall design, we started breaking the system down into layers that could be implemented separately.

Decomposing the system into four layers

... Everyone designs who devises courses of action aimed at changing existing situations into preferred ones. - Herbert Simon

By observing the space we inhabit as a richly layered, semantic object, FocalSpace can be a valuable tool for application domains beyond video conferencing. For future work, we are considering eye tracking as an additional feature, so that the focus follows a user's gaze.